https://github.com/PacktPublishing/Machine-Learning-Algorithms

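As before, start by importing the packages. This time the same old tool handles the math (NumPy, under the same alias np), while a different tool does the drawing.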

import numpy as np

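If you are working in Jupyter Notebook, install graphviz first (it is a tool for drawing graphs, trees included), then use scikit-learn's built-in function export_graphviz().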

pip install graphviz

from sklearn.tree import export_graphviz

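Next, call np.random.seed() to fix the random seed so the generated data is reproducible, set the number of samples to 500, and build a dataset with 3 features and 3 classes.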

from sklearn.datasets import make_classification

np.random.seed(1000)
nb_samples = 500
X, Y = make_classification(n_samples=nb_samples, n_features=3, n_informative=3,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1)
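To make the role of the seed concrete, here is a minimal sketch. It assumes make_classification() draws from NumPy's global generator when no random_state is passed, which is why seeding beforehand pins down the samples. The helper name make_dataset is made up for this illustration.

```python
import numpy as np
from sklearn.datasets import make_classification


def make_dataset(seed):
    # Hypothetical helper: seed NumPy's global generator, then draw the
    # dataset with the same settings as in the post.
    np.random.seed(seed)
    return make_classification(n_samples=500, n_features=3, n_informative=3,
                               n_redundant=0, n_classes=3,
                               n_clusters_per_class=1)


X1, Y1 = make_dataset(1000)
X2, Y2 = make_dataset(1000)

print(X1.shape, Y1.shape)        # (500, 3) (500,)
print(np.array_equal(X1, X2))    # the same seed gives the same samples
print(np.unique(Y1))             # the class labels present in the data
```

Passing random_state=1000 directly to make_classification() would be another way to get reproducible data without touching the global generator.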

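Next, classify using the default Gini impurity. Gini impurity measures how clean a split is (is this batch pure or not?): the smaller the value, the purer the node [and the higher the price (just kidding)].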
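As a small worked example, Gini impurity for a set of labels can be computed as 1 minus the sum of squared class proportions. The function name gini_impurity is made up for this sketch. It only illustrates the formula and is not how scikit-learn implements it internally.

```python
import numpy as np


def gini_impurity(labels):
    # Gini impurity: 1 - sum_k p_k^2, where p_k is the share of class k.
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


print(gini_impurity([0, 0, 0, 0]))   # 0.0, a single class is perfectly pure
print(gini_impurity([0, 0, 1, 1]))   # 0.5, two classes split evenly
print(gini_impurity([0, 1, 2]))      # about 0.667, three classes split evenly
```

A node holding only one class scores 0, and the score grows as the classes get more evenly mixed.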


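After creating the decision tree classifier, evaluate it with 10-fold cross-validation. The mean accuracy comes out at about 97%.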

from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import cross_val_score

dt = DecisionTreeClassifier()
dt_scores = cross_val_score(dt, X, Y, scoring='accuracy', cv=10)
print('Decision tree score: %.3f' % dt_scores.mean())
# Decision tree score: 0.972

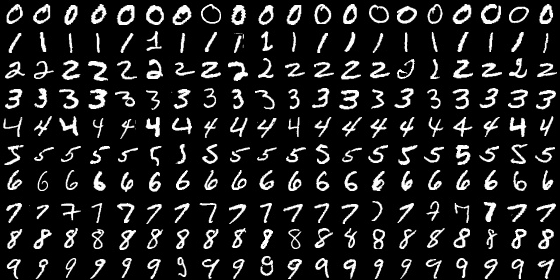
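The export_graphviz() function imported earlier is never actually called in the snippets here, so the following is a minimal sketch of one way it could be used. The feature names A, B, C and class names C1, C2, C3 are placeholders chosen for illustration. Note that cross_val_score() works on clones, so the tree has to be fitted explicitly before exporting.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier, export_graphviz

np.random.seed(1000)
X, Y = make_classification(n_samples=500, n_features=3, n_informative=3,
                           n_redundant=0, n_classes=3,
                           n_clusters_per_class=1)

# Fit the tree on the full dataset so there is something to export.
dt = DecisionTreeClassifier()
dt.fit(X, Y)

# With out_file=None the DOT description is returned as a string.
dot_data = export_graphviz(dt, out_file=None,
                           feature_names=['A', 'B', 'C'],     # placeholder names
                           class_names=['C1', 'C2', 'C3'],    # placeholder names
                           filled=True)

print(dot_data.splitlines()[0])   # first line of the DOT source
```

In a notebook, graphviz.Source(dot_data) from the graphviz package should render the tree inline, provided the Graphviz binaries are installed on the system in addition to the pip package.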

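Running a grid search over the most common parameters on the MNIST digits dataset (the digits object in the code, assumed here to come from scikit-learn's load_digits()) gives about 82% accuracy.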

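import multiprocessing
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV

# Assumption: digits is scikit-learn's built-in digits dataset
digits = load_digits()
param_grid = [{
    'criterion': ['gini', 'entropy'],
    # 'auto' was removed in scikit-learn 1.3; for classifiers it meant 'sqrt'
    'max_features': ['sqrt', 'log2', None],
    'min_samples_split': [2, 10, 25, 100, 200],
    'max_depth': [5, 10, 15, None]
}]
# Exhaustive search over the grid, scored by 10-fold cross-validated accuracy
gs = GridSearchCV(estimator=DecisionTreeClassifier(), param_grid=param_grid,
                  scoring='accuracy', cv=10, n_jobs=multiprocessing.cpu_count())
gs.fit(digits.data, digits.target)
print(gs.best_estimator_)
# DecisionTreeClassifier()
print('Decision tree score: %.3f' % gs.best_score_)
# Decision tree score: 0.822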
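Recent scikit-learn versions print only the non-default parameters of an estimator, so gs.best_estimator_ can look emptier than it is. A minimal sketch of reading the winning combination from best_params_ and cv_results_ follows. The grid is deliberately much smaller than the one above and cv is set to 5, both assumptions made only to keep the run short, so the scores will not match the 82% figure exactly.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

digits = load_digits()

# Reduced grid (an assumption for speed, not the grid used in the post).
param_grid = {
    'criterion': ['gini', 'entropy'],
    'max_depth': [5, 10, None],
}

gs = GridSearchCV(estimator=DecisionTreeClassifier(random_state=0),
                  param_grid=param_grid, scoring='accuracy', cv=5)
gs.fit(digits.data, digits.target)

# The winning parameter combination and its mean cross-validated accuracy.
print(gs.best_params_)
print('Best mean CV accuracy: %.3f' % gs.best_score_)

# Mean accuracy for every combination that was tried.
results = gs.cv_results_
for params, score in zip(results['params'], results['mean_test_score']):
    print(params, '%.3f' % score)
```

Looking at the full cv_results_ table shows how close the runner-up settings are, which is often more telling than the single best score.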